2. Related Work

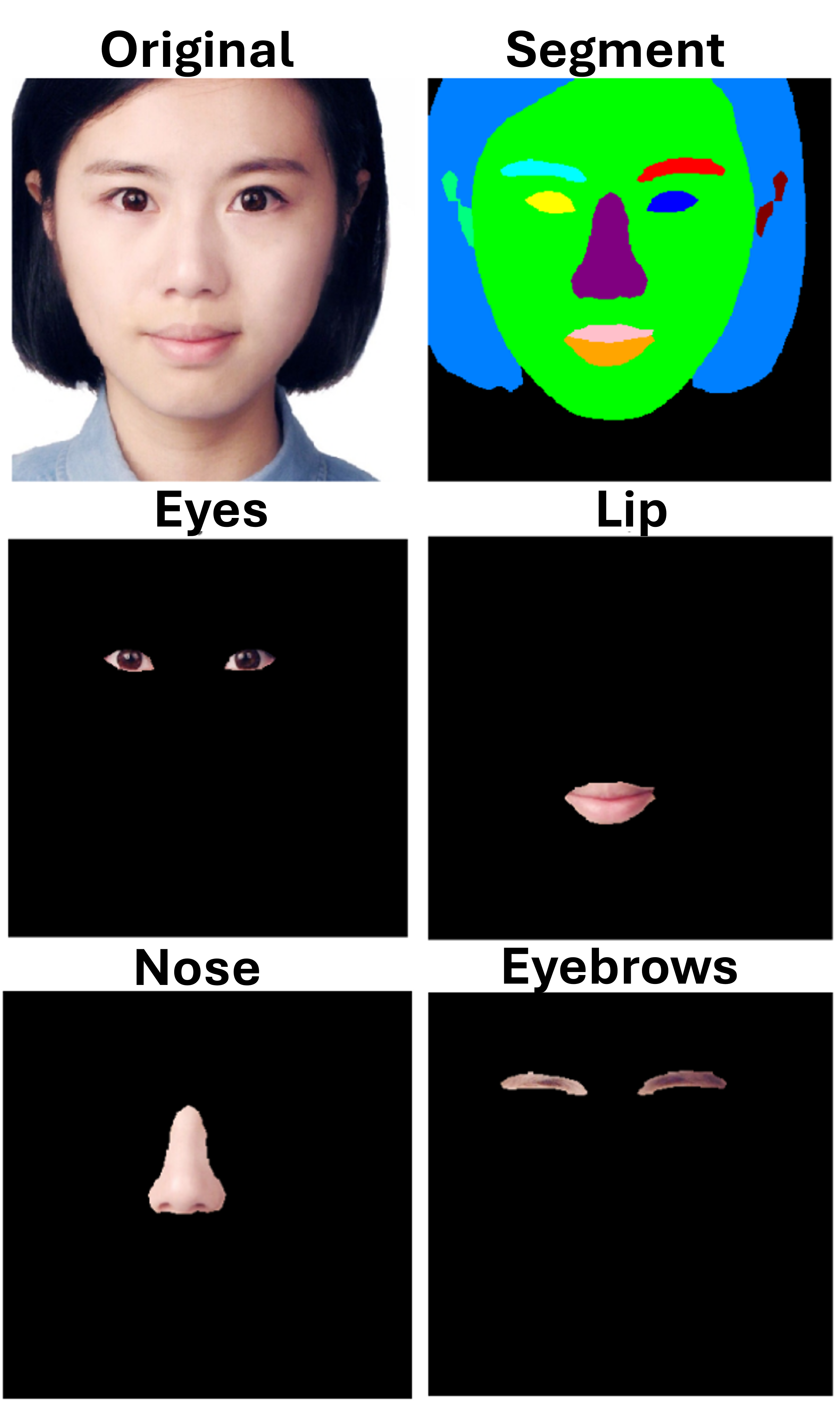

Previous approaches to facial makeup transfer can be broadly categorized into pixel-level transformations and neural network-based techniques. Compared to general style transfer, makeup transfer poses a distinct challenge: makeup is applied locally around facial keypoints (nose, eyes, eyebrows, etc.).

2.1 Pixel-Level Transformations

Early methods often relied on image blending [Project3] or histogram matching to transfer makeup styles. These methods typically fail to handle spatial variations in makeup styles, such as gradients in eye shadow or contours, resulting in unnatural or inconsistent results.

2.2 Neural Network-Based Techniques

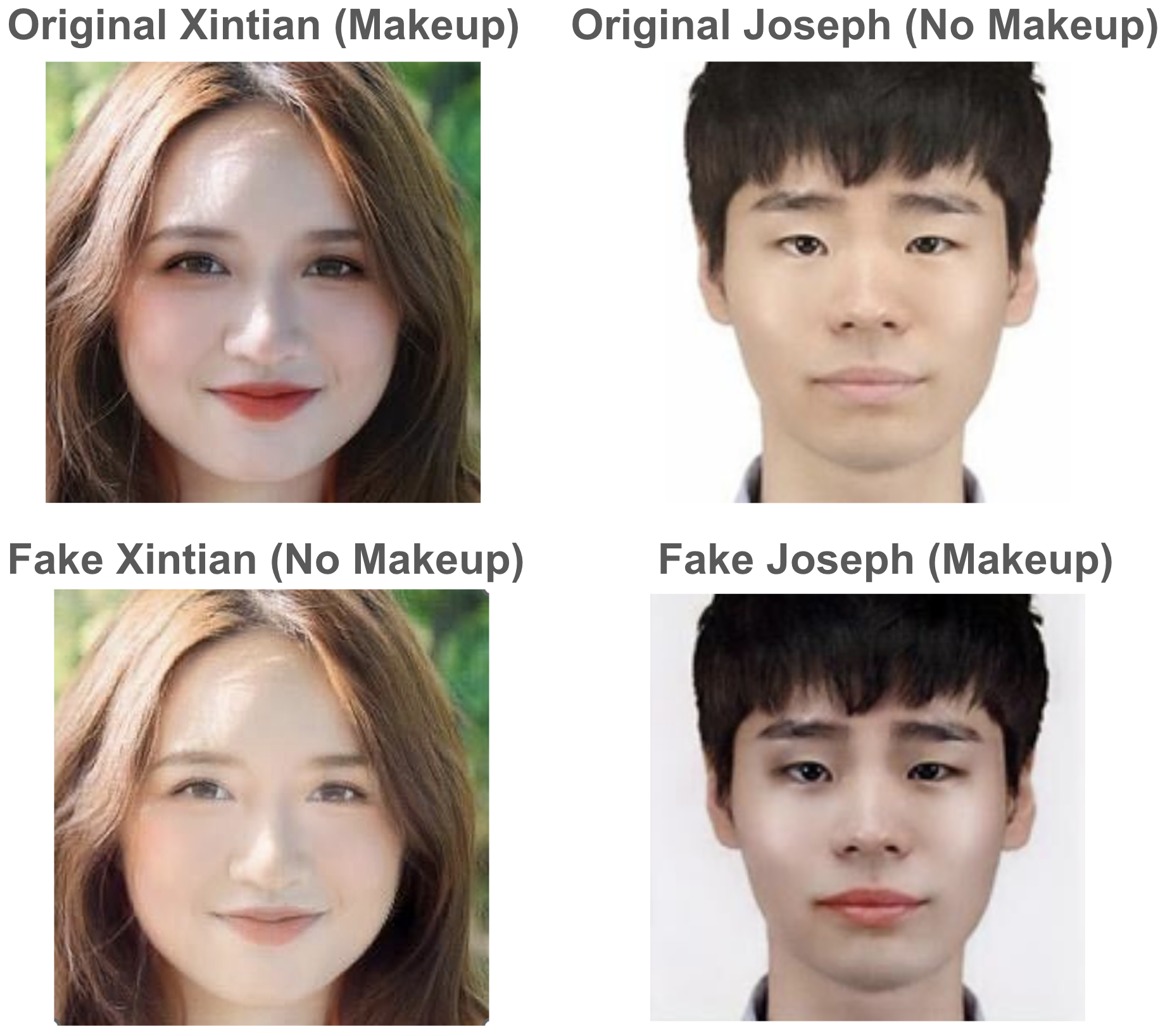

A generative adversarial network (GAN) has two parts: the generator learns to generate plausible data and the discriminator learns to distinguish the generator's fake data from real data. Existing GAN-based techniques for makeup transfer often fail to disentangle makeup styles from facial features, leading to unintended modifications in the identity of the target image. Furthermore, these methods struggle to retain subtle details, such as skin texture or eye shapes, especially when transferring intricate makeup styles.

This project builds on the BeautyGAN framework, which leverages a dual-input GAN architecture to separate facial structure and makeup style effectively. Specifically, we focus on facial identity preservation, transfer accuracy, and reversibility.

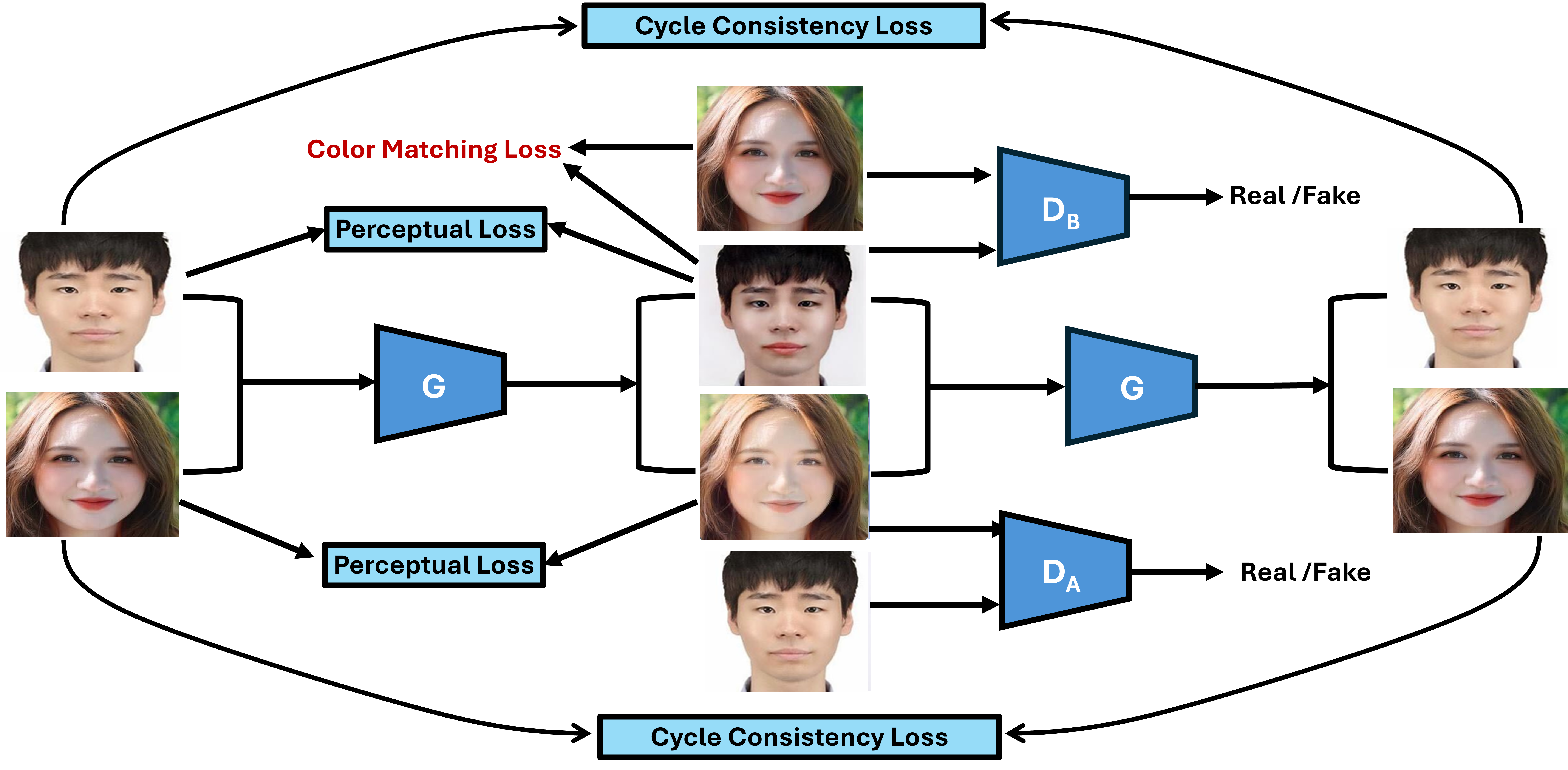

In addition to an adversarial loss, a cycle consistency loss guides the model to generate a reversible modification, and a perceptual loss term preserves high-level semantic features and the content structure of the input images. There is also an additional loss term, designed specifically for the makeup transfer task, that aims to match the texture and color distribution of key facial regions.

As illustrated in the figure below, we follow common GAN frameworks and employ an architecture that consists of one generator \( G \) and two discriminators, one operating on the non-makeup image domain and the other on the makeup image domain.

2.3 Additional Loss for Makeup Task

The primary purpose of makeup is to modify color information (lipsticks, shadows), while secondary effects such as texture and edges are also important considerations for cosmetics consumers. By breaking the makeup up into several individual regions (lips, eyebrows, skin tones, etc.) and comparing the source image and the generated image at those regions in terms of color distribution and texture, we can systematically establish the makeup loss.
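The region-wise comparison can be illustrated with a minimal, dependency-free sketch. It assumes that each region has already been cut out as a flat list of 8-bit intensities for one color channel, and that the penalty is a mean squared error against a histogram-matched target. The function names and the choice of mean squared error are illustrative assumptions, not details taken from this section.

```python
from typing import List, Sequence


def cdf(values: Sequence[int], levels: int = 256) -> List[float]:
    """Cumulative distribution of integer intensities in [0, levels)."""
    counts = [0] * levels
    for v in values:
        counts[v] += 1
    total = len(values)
    out, running = [], 0
    for c in counts:
        running += c
        out.append(running / total)
    return out


def match_histogram(region: Sequence[int], reference: Sequence[int],
                    levels: int = 256) -> List[int]:
    """Remap `region` so its intensity distribution follows `reference`."""
    src_cdf = cdf(region, levels)
    ref_cdf = cdf(reference, levels)
    mapping = []
    r = 0
    for s in range(levels):
        # Smallest reference level whose CDF reaches the source CDF at s.
        # Both CDFs are non-decreasing, so r never has to move backwards.
        while r < levels - 1 and ref_cdf[r] < src_cdf[s]:
            r += 1
        mapping.append(r)
    return [mapping[v] for v in region]


def region_loss(generated: Sequence[int], target: Sequence[int]) -> float:
    """Mean squared error between generated pixels and the matched target
    (assumed penalty; the section does not specify one)."""
    return sum((g - t) ** 2 for g, t in zip(generated, target)) / len(generated)
```

For example, `match_histogram(lips_plain, lips_reference)` would give a target for the lip region of one channel, and `region_loss(lips_generated, target)` would score the generator output against it. The same two calls would be repeated per channel and per region (skin, eyes), with `lips_plain`, `lips_reference`, and `lips_generated` standing in for hypothetical masked pixel lists.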

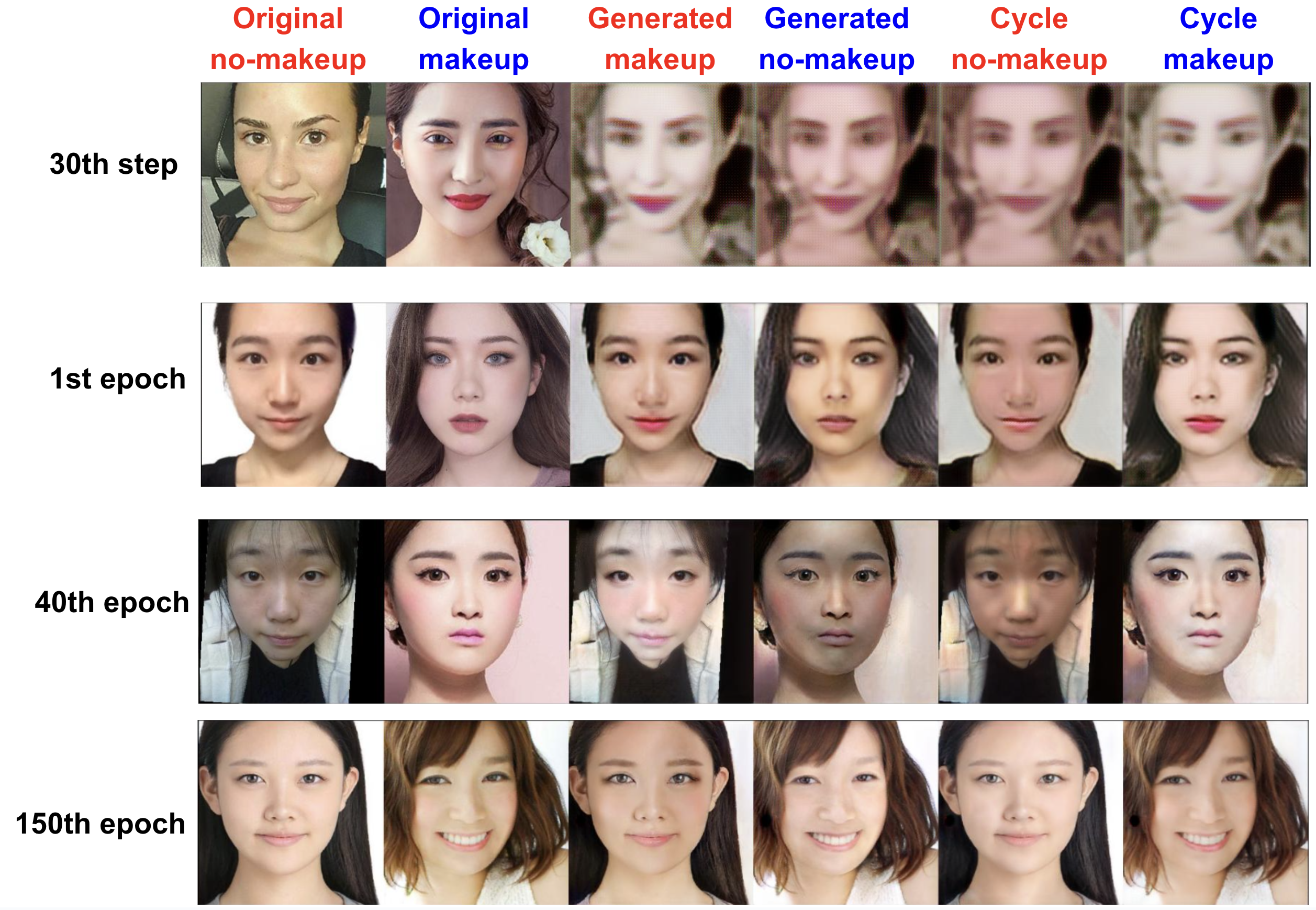

2.4 Implementation Details

We implement an architecture that includes a StyleGAN-based generator for higher resolution and realism, with the addition of two discriminators to enforce both global and local consistency. Training is performed on face datasets that come with a binary makeup / non-makeup classification label, possibly augmented with additional high-resolution facial images for diversity. Exploratory hyperparameter tuning is performed on the weightings of the loss terms as well as on a set of training parameters.

2.5 Architecture Detail

The facial makeup transfer system builds on a modified BeautyGAN framework and consists of a single generator (\( G \)) and two discriminators (\( D_A \), \( D_B \)).

2.5.1 Generator

The dual-input generator starts with two downsampling convolution blocks, one encoding non-makeup images and the other encoding makeup images, followed by a concatenation of the encodings along the channel dimension. The concatenated encodings go through repeated residual blocks, are up-sampled, and are passed to two convolution blocks which decode the images. The generator is designed to learn the makeup style transfer between two images. Separate encoding / decoding blocks are employed because the two images belong to different domains, one makeup and one non-makeup. The total number of trainable parameters in the generator is 9.2M.
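The data flow through the generator can be followed with a small shape-bookkeeping sketch. It tracks only tensor shapes, not actual layers. The channel width (128) and the total downsampling factor (4) are hypothetical values chosen for illustration, since this section does not state them.

```python
from typing import Dict, Tuple

Shape = Tuple[int, int, int]  # (channels, height, width)


def encode(shape: Shape, out_channels: int, factor: int) -> Shape:
    """Downsampling convolution block: fewer pixels, more channels."""
    _, h, w = shape
    return (out_channels, h // factor, w // factor)


def concat_channels(a: Shape, b: Shape) -> Shape:
    """Concatenate two encodings along the channel dimension."""
    if a[1:] != b[1:]:
        raise ValueError("spatial sizes must match before concatenation")
    return (a[0] + b[0], a[1], a[2])


def upsample(shape: Shape, factor: int) -> Shape:
    """Restore the input resolution; channel count is left unchanged here."""
    c, h, w = shape
    return (c, h * factor, w * factor)


def decode(shape: Shape, out_channels: int = 3) -> Shape:
    """Decoding convolution block mapping features back to an RGB image."""
    _, h, w = shape
    return (out_channels, h, w)


def trace_generator(image: Shape = (3, 256, 256),
                    enc_channels: int = 128,  # hypothetical width
                    factor: int = 4           # hypothetical downsampling
                    ) -> Dict[str, Shape]:
    enc_plain = encode(image, enc_channels, factor)   # non-makeup branch
    enc_makeup = encode(image, enc_channels, factor)  # makeup branch
    fused = concat_channels(enc_plain, enc_makeup)
    features = upsample(fused, factor)  # residual blocks keep the fused shape
    return {
        "encoding": enc_plain,
        "fused": fused,
        "with_makeup": decode(features),     # first decoding block
        "makeup_removed": decode(features),  # second decoding block
    }
```

Under these assumed sizes, `trace_generator()` reports a fused tensor of shape `(256, 64, 64)` and two decoded outputs of shape `(3, 256, 256)`, one per image domain.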

2.5.2 Discriminator

Two discriminators are employed, one specialized in discriminating makeup images and the other non-makeup images. Each discriminator consists of sequential convolution layers, each of which downsamples its input by a factor of 2 and doubles the number of channels. A spectral normalization layer is inserted between convolution layers to stabilize training. The final convolution layer maps its input to a single channel, thereby transforming a 3-channel input image of \( 256 \times 256 \) into a patch map of \( 30 \times 30 \). The fake / real classification is not made as a single binary decision; instead, each patch is scored against a target of 1 (real) or 0 (fake).
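The \( 256 \times 256 \) to \( 30 \times 30 \) mapping can be checked with a short calculation. Exact halving alone cannot turn 256 into 30, so the sketch below assumes the layer settings of a typical patch-based discriminator: three stride-2 convolutions followed by two stride-1 convolutions, all with kernel size 4 and padding 1. These settings are an assumption and are not stated in this section.

```python
from typing import List, Tuple


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output size of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


# Assumed (kernel, stride, padding) per layer; not taken from the source.
# Three stride-2 layers halve the resolution, then two stride-1 layers
# (the last one mapping to a single channel) each trim one pixel.
ASSUMED_LAYERS: List[Tuple[int, int, int]] = [
    (4, 2, 1),
    (4, 2, 1),
    (4, 2, 1),
    (4, 1, 1),
    (4, 1, 1),
]


def patch_map_size(input_size: int = 256) -> int:
    """Side length of the discriminator's output patch map."""
    size = input_size
    for kernel, stride, padding in ASSUMED_LAYERS:
        size = conv_out(size, kernel, stride, padding)
    return size


def patch_targets(size: int, real: bool) -> List[List[float]]:
    """Per-patch labels: all ones for a real image, all zeros for a fake."""
    value = 1.0 if real else 0.0
    return [[value] * size for _ in range(size)]
```

With these settings the resolution goes 256, 128, 64, 32, 31, 30, so `patch_map_size(256)` returns 30, and `patch_targets(30, real=True)` builds the all-ones target map that a real image would be scored against.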

2.6 Loss Detail

At each training step, the discriminator weights are updated, followed by a generator update. The discriminator loss is calculated from four images, two original images and two fake images (the four fan-ins to \( D_A \) and \( D_B \) in the preceding architecture figure). The generator loss is a linear combination of the following losses:

Cycle consistency loss. When the fake images are passed through the generator again, the outputs should be mapped back to the original image domains and, ideally, to the original images themselves.

Perceptual loss. Even after makeup transfer, the generated face should preserve the general facial structure (the basic shape of facial keypoints and the overall layout). We therefore employ a pretrained VGG-16, take an intermediate feature (the activation of layer 17), and compare this feature between the original and generated images (the two Perceptual Loss terms in Figure 1).

Color matching loss. This loss ensures that the transferred makeup aligns with the source by matching the color distribution of facial regions (e.g., lips, eyes, skin). Histogram matching is used to align color intensity and tone while preserving gradients and textures for realistic results.

These loss components are summed with weights \( \lambda_{cycle} \), \( \lambda_{percep} \), \( \lambda_{skin} \), \( \lambda_{lip} \), \( \lambda_{eye} \), which are tuned for the best training result.
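Written out, the weighted sum might take the following form. This is a sketch under assumptions rather than the exact objective used. Here \( x \) denotes a non-makeup image, \( y \) a makeup image, \( \tilde{x} \) and \( \tilde{y} \) the two generator outputs, and \( \phi \) the VGG-16 feature extractor. The argument order of \( G \), the choice of the \( L_1 \) and squared \( L_2 \) norms, and the unit weight on the adversarial term \( L_{adv} \) are not specified in this section.

\[
\begin{aligned}
(\tilde{x}, \tilde{y}) &= G(x, y), \qquad (y_{rec}, x_{rec}) = G(\tilde{y}, \tilde{x}), \\
L_{cycle} &= \lVert x_{rec} - x \rVert_1 + \lVert y_{rec} - y \rVert_1, \\
L_{percep} &= \lVert \phi(\tilde{x}) - \phi(x) \rVert_2^2 + \lVert \phi(\tilde{y}) - \phi(y) \rVert_2^2, \\
L_G &= L_{adv} + \lambda_{cycle} L_{cycle} + \lambda_{percep} L_{percep} + \lambda_{skin} L_{skin} + \lambda_{lip} L_{lip} + \lambda_{eye} L_{eye}.
\end{aligned}
\]

In the second pass the two fake images swap input slots, because \( \tilde{y} \) now lies in the non-makeup domain and \( \tilde{x} \) in the makeup domain. The terms \( L_{skin} \), \( L_{lip} \), and \( L_{eye} \) are the color matching loss evaluated on the respective facial regions.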